Impossible Mission (legally distinct from its extremely obvious permutation) was one of the more popular games on Commodore 64, and I think it still has its charm. I think the mechanics like basing floor detection on the position of your foot, and the unadjustable front-flip jump, make for an interesting game design study.

I missed out on the famous original and grew up with Impossible Mission II on Amiga, released in 1988. The gameplay is quite similar, and I think it's a bit of a hidden gem, especially given how few people in my demographic (millenials in North America) have heard of Impossible Mission or have even played a game on C64 or Amiga.

So, as any reasonable enjoyer of a video game does, I started to reverse engineer it.

The disassembly

I used Aira Force to disassemble the binary and, surprisingly, was able to reassemble and boot the game without needing to fix too many things.

My first goal was to map out some of the functions used for starting up the game, because if I'm going to be doing a lot of debugging, I want to be able to boot into the game as quickly as possible.

Thankfully, the boot process is extremely linear. It was easy to find and disable lots of functions that pertain to running the game's intro sequence. Soon, the game was booting straight into gameplay.

Here's a (partially) mapped excerpt from the end of the boot process. pl_update is an infinite loop that returns only when the game ends, sending us back to .newgame for another go.

.newgame: jsr unk_build_world(pc)

jsr clear_inputs

jsr sub_19170

jsr setup_clear_tape_data

jsr setup_load_tower

jsr setup_init_player_vars

jsr sub_12fa6

jsr do_room_trans_fade

jsr sub_1a1ae

jsr setup_spawn_player

move.w #$0001,TimerActive

jsr pl_update

bra.s .newgame

unlk a5

rts

.file1: dc.b "hang/wh_d",0

.file2: dc.b "hang/cho3_d.k2",0

.file3: dc.b "game",0

.playsaved: dc.b "play a saved game?",0

.spaces: dc.b " ",0There are some strings at the bottom of the function. Whatever C compiler they used (most of IM2 was written in C), any string literals written as function parameters are placed at the bottom of the function like this, where it's easy to retrieve a PC-relative pointer to them. (PC-relative means referencing data relative to the program counter, or the current spot in execution, which is more efficient than using 32-bit absolute pointers.)

The file compression

Of course, part of the reverse-engineering effort involves mapping and understanding the data files, not just the executable. I think it would be really cool to eventually add custom rooms/levels to the game.

The first thing I noticed was that even small files take a really long time to load. Each 10kb file would take 1-2 seconds to load; well, the disk activity was instantaneous, but it would be another second of silence before the file was "done" loading. Seemed likely that there was some kind of decompression going on.

Here's what I found:

file_unpack:

; a2 = pointer to source file

movea.l FileSourcePtr,a2

clr.l d2

; Get first 2 bytes from source, which is destination file size

move.w (a2)+,d2

move.l d2,FileDestSize

; Set up d2 as a loop counter equal to the file size

subq.w #1,d2

; a2 = Source+2, a0 = Source+16

movea.l a2,a0

adda.l #$0000000e,a0

; a1 = Destination pointer

movea.l FileDestPtr,a1

; Prep initial data

move.b #$01,d3

move.b (a0)+,d6

; Run decode_byte for every byte in the destination size

.0: bsr.s decode_byte

dbf d2,.0

rtsI added the comments myself after coming to understand this whole algorithm, and hopefully they'll help me explain it as we go.

We haven't gotten to the meat yet, but the above function sets up the source and destination pointers, and then runs decode_byte once for every byte in the final file. Basically, decode_byte will "decode" one byte of the destination file; this compression algorithm decodes one byte at a time.

I'm not going to nitpick code quality too hard, because it was written in the 80s. But goodness, it would have been an extremely simple optimization to just inline decode_byte rather than calling it as a function over and over again. That's Assembly 101 in C64 world. (These unpack functions were hand-written in Assembly, not compiled from C.)

Calling (and returning from) a subroutine costs about 34 cycles in total. This is happening for every byte in a 15+kb file. If you do the math, that's over 500,000 cycles wasted. In the best case, that's about 75ms, but I wouldn't be surprised if it could cost up to 150ms depending on what else the system is doing. Not for running the subroutine, just for calling it.

A 10-15% speed improvement is pretty dang good for such a small change to the code. But we'd be here all day if our goal was to fully optimize this routine.

Let's move on to decode_byte, shall we?

decode_byte:

; Get next 2 bits in compressed file

clr.w d4

bsr.s next_bit

bsr.s next_bit

; If not 00, enter dict lookup

tst.w d4

bne.s decode_byte_from_dict

; Otherwise, next 8 bits are a literal byte

bsr.s next_bit

bsr.s next_bit

bsr.s next_bit

bsr.s next_bit

bsr.s next_bit

bsr.s next_bit

bsr.s next_bit

bsr.s next_bit

; (Literal mode) Write next byte

move.b d4,(a1)+

rts

next_bit:

; d6 = 1-byte buffer from source file

roxl.b #1,d6

; d4 = current bits being read/processed

; (roxl shifts out of d6 into d4)

roxl.b #1,d4

; d3 = counter, have we read 8 bits out of buffer?

rol.b #1,d3

bcc.s .e

; If so, read next byte into buffer

move.b (a0)+,d6

.e: rts

decode_byte_from_dict:

; Dict lookup mode - get index based on 2-bit "size ID"

; d1 = index size

move.w d4,d1

clr.w d4

; Index size 1, get next 1 bit for dict index 0-1

bsr.s next_bit

subq.w #1,d1

beq.s .write

; Index size 2, get next 2 bits for dict index 2-5

bsr.s next_bit

subq.w #1,d1

beq.s .0

; Index size 3, get next 3 bits for dict index 6-13

bsr.s next_bit

addq.w #4,d4

.0: addq.w #2,d4

; Write byte from dict to destination

.write: move.b 0(a2,d4.w),(a1)+

rtsI'll summarize the way this function works:

- Get the next 2 bits in the source file, which denotes size of the dictionary index.

- If index size is 0, there's no dictionary match, so just read the next 8 bits from the source, which is our literal byte.

- Otherwise, get a byte from the dictionary based on index size:

- Index size 1 (1-bit index): Entries 0-1

- Index size 2 (2-bit index): Entries 2-5

- Index size 3 (3-bit index): Entries 6-13

I don't know much about compression algorithms, so I can't immediately identify this as an implementation of a well-known algorithm, but I think there are some cool things about it.

Basically, the dictionary contains what I assume are the most "popular" 14 bytes in the unpacked file. To compress the most popular byte, we'd have 010 in binary: 01 for a 1-bit index, then 0 for index 0.

So, the two most popular bytes get compressed down to 3 bits in every occurrence. Slightly less popular bytes will get compressed to 4 or 5 bits, depending on the index size. If we have 1011 in binary: 10 for a 2-bit index, then 11 for index 5. (It's 5 because the 2-bit index starts at 2; 0-1 are covered by the 1-bit index.)

The downside is that if our byte isn't in the dictionary, then it takes ten bits to encode: 00 for "literal mode", then the literal byte.

Anyway, now we have a better idea of why these files take so long to unpack. Not only are we in subroutine hell, but the compressed file isn't even byte-aligned, so we have no choice but to process it by shifting 1 bit at a time. Sure, there are plenty of code optimizations we could make, but it's clear that this algorithm wasn't built for fast unpacking. In fact, it almost feels more suited to 8-bit machines, which are good at shifting bits one by one.

What about second compression?

What if I told you...that once the file is unpacked, it gets unpacked again?

I don't know how common this was, but these folks decided to double up on their compression, putting their files through two separate algorithms. Funny thing is, the second unpack routine is far more efficient—there are no subroutines, and the data is even 16-bit aligned.

file_unpack_2:

; Set up pointers and get source file size

movea.l FileSourcePtr,a0

move.l FileSourceSize,d2

movea.l FileDestPtr,a1

suba.l a3,a3

; Prep d4 and d5 with "magic numbers" in file header

move.w (a0)+,d4

move.w (a0)+,d5

subq.l #4,d2

; Start loop

.loop: move.w (a0)+,d1

cmp.w d4,d1

beq.s .fillmode

cmp.w d5,d1

bne.s .literal

; Index mode (magic num in d5)

clr.l d0

move.w (a0)+,d0

move.w (a0)+,d3

adda.w d3,a3

movea.l a1,a2

suba.l d0,a2

.iloop: move.w (a2)+,(a1)+

subq.w #2,d3

bne.s .iloop

subq.l #6,d2

bra.s .next

; Fill mode (magic num in d4)

.fillmode: move.w (a0)+,d0

move.w (a0)+,d1

adda.w d1,a3

.floop: move.w d0,(a1)+

subq.w #2,d1

bne.s .floop

subq.l #6,d2

bra.s .next

; Literal mode no magic num match)

; Just write the word we took from source file

.literal: move.w d1,(a1)+

subq.l #2,d2

addq.l #2,a3

; Loop if there's more to do

.next: tst.l d2

bne.s .loop

; We're done, return destination size in d0

move.l a3,d0

rtsThis one is an LZ77 compression algorithm! LZ77 is the basis for many modern compression formats that we use today. This is a word-aligned version of the algorithm that seems written specifically for being efficient on 16-bit hardware.

The staple feature of LZ77 is sliding-window compression. As the data is getting unpacked, it can look back at previously-unpacked data and copy it.

Here is a summary:

- Get the next 2 bytes from the source file.

- Check if it's a magic number:

- Magic number 1: Fill mode, get next 4 bytes as parameters

- Magic number 2: Index mode, get next 4 bytes as parameters

- No magic number: Literal, just write bytes to destination

- Perform a copy based on the mode:

- Fill mode: Fill Y bytes of destination with 16-bit value X

- Index mode: Look back in destination X bytes, and copy Y bytes to current spot

- Loop if we haven't reached the end of the source file yet.

In the previous compression algorithm, every individual byte in the unpacked file had a representation in the packed file, each 3-10 bits in size. But here, a single operation can represent many bytes if that same pattern has appeared earlier in the file.

"Fill mode" is a standout feature that isn't part of LZ77. The file can provide a 16-bit value to fill some arbitrary amount of space with. This takes 6 bytes (2 for magic number, 2 for fill value, 2 for size).

Without "fill mode", we can do the exact same thing in 8 bytes using a literal and "index mode". (Write the literal, then index back 2 bytes and copy however much you want to fill.) Still, the inclusion of "fill mode" is slightly more efficient, and it's probably an easier job for the compression software to figure out when packing the file.

Another interesting observation: We don't even know the unpacked file size until we're done unpacking. But the game is only unpacking data structures of a predetermined size, so this isn't an issue for the game. Otherwise, I guess it would be included in the header, like in the previous compression format.

Results of the compression

Taking one of the game files, PECHARG.SQ, let's test the compression efficiency of the packers that were used for IM2. I'll also compare to some modern compression algorithms.

- Uncompressed: 29,112 bytes

- LZ77 (Original IM2 packer): 18,082 bytes

- LZ77 + Dictionary (Original IM2 packer): 13,602 bytes

- LZ4: 12,612 bytes

- ZX0: 9,596 bytes

- LZMA2: 8,556 bytes

Not bad! The 1988 packers are within reach of LZ4, but the clear winner is LZMA2 (7-Zip). Not that LZMA2 decompression is likely feasible to implement on such old hardware with any decent performance.

Given that, the most notable one here is ZX0. ZX0 is a modern compression algorithm based on LZ77, and it's optimized for 8-bit computers and other retro hardware. It's extremely fast to decompress, and it can do in-place decompression. That means you can unpack the file right on top of the packed file, without having to allocate memory for both separately (you just need to allocate enough for the unpacked file).

I don't have an exact benchmark, but some quick calculations tell me that ZX0 would decompress PECHARG.SQ in 150ms or less. That's at least a 6-10x speed improvement over the original unpacker(s), and at ~70% the file size.

Part of ZX0 is that while the decompression is extremely fast, the compression is not. Thankfully, we have modern CPUs to do the compressing, and I can compress PECHARG.SQ in about 500ms.

Even if they had ZX0 in 1988, it would be impossible to use. Fellow community member alpine9000 took the ZX0 compressor salvador and compiled it for Amiga, just to see how it would run. On a 14MHz 68020, it took about an hour to compress that same file. That's maybe okay, until I noticed that it took 88MB of RAM to do the job. Back then, the lucky ones had a 20MB hard disk, and not likely more than 1MB of RAM (maybe 4-8MB if they were really maxed out).

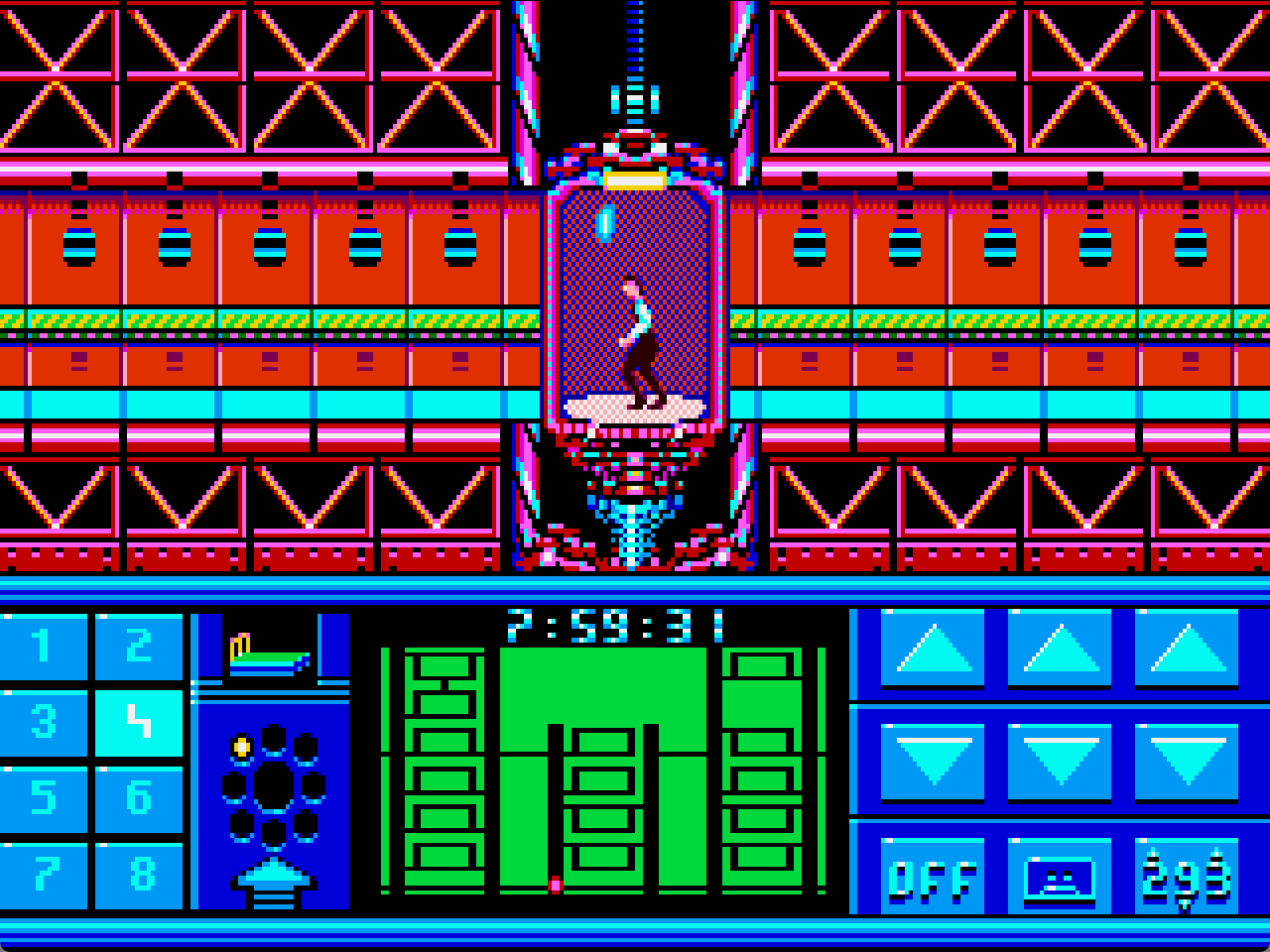

This is just one example of how we can use modern tools and knowledge to breathe new life into old hardware. With a format like ZX0, it becomes feasible to decompress assets practically in realtime, enabling new gameplay experiences that would not have been possible in 1988. In my own Amiga game, I'm doing lots of realtime asset decompression mid-gameplay, probably something that was hardly ever considered back then. For example, check out this effect I made, which takes advantage of ZX0's fast decompression.

If you have an idea of what the source software might be for the compression formats I found in IM2, I'd love to hear about it!